A supply chain attack is one in which an attacker seeks to exploit an organization by attacking a weaker link in the supply chain that the organization depends on. No organization writes 100% of the code it uses in its operations. There are many external dependencies, such as open source projects, standard libraries, third-party vendor products, and the hardware these all run on. Each of these can be attacked, and so too can the organizations that depend on them. There are countless real-world examples of supply chain attacks in which organizations suffered significant damage, including NotPetya (2017), the Target data breach, and the SolarWinds hack.

Motivation

Why am I doing this?

- I see supply chain attacks as an interesting attack vector with a relatively low bar of technical knowledge required to carry one out. Note that this only applies to specific types, as “supply chain attack” is a very broad topic.

- I want to explore how an attacker could inject malicious code into an open source project and what exactly the impact could be.

- I want to share this investigation so I can flesh out my half-formed thoughts on the subject.

In this post, I’m looking into what the process of attacking the supply chain could look like in a Ruby on Rails application. In particular, Rails apps use external libraries in the form of Gems. I want to see how an attacker could inject a Gem with malicious code to exploit a dependent app.

All the code for this post can be found under two repos on my GitHub: the gem and the Rails app.

The Application

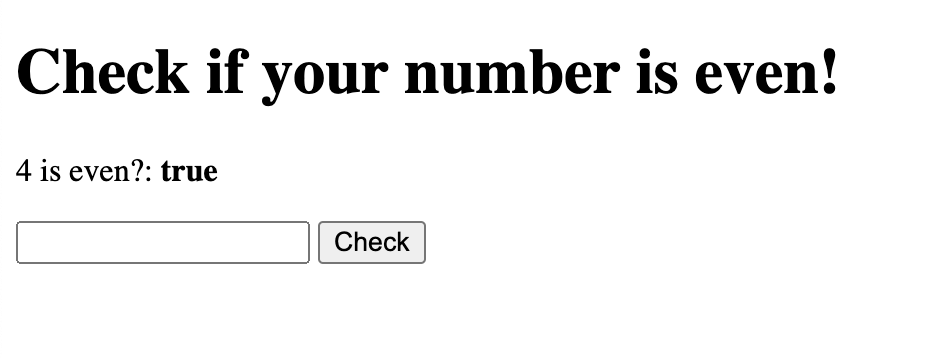

First, we start with a simple Rails application that takes input in a text field and checks if the value is an even number. But since checking whether a number is even is “difficult”, we decide to use an existing Gem called evil_gem to do the work for us.

We add it to our Gemfile and then run bundle install. Initially, the gem looks fine! It simply checks whether the input is an even number.
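As a rough sketch of what such a benign check could look like: the module name `EvilGem`, the method name `even?`, and the use of `Integer` for parsing are assumptions for illustration, not necessarily the gem's actual code.

```ruby
# Hypothetical sketch of a benign even-number check (names are assumed).
module EvilGem
  # Returns true when the input parses as an even base-10 integer.
  # Integer() raises ArgumentError for non-numeric input, which would
  # surface as an error in the calling application.
  def self.even?(input)
    Integer(input.to_s, 10).even?
  end
end

puts EvilGem.even?("4") # => true
puts EvilGem.even?("7") # => false
```

Nothing here touches the operating system, which is what makes the later change to the gem stand out.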

The controller for this page passes the submitted value to the gem and displays the result.

So, except for the error thrown when the input is not a number, all is well!

The Malicious Actor

But now suppose a malicious actor, call her Mallory, comes along and opens a pull request on evil_gem, or phishes the maintainer and takes over their account. Suddenly, Mallory can update the code this Rails application depends on.

Using a crap justification like “using OS commands and capturing the output is more performant than Ruby’s native even?”, Mallory updates the Gem.


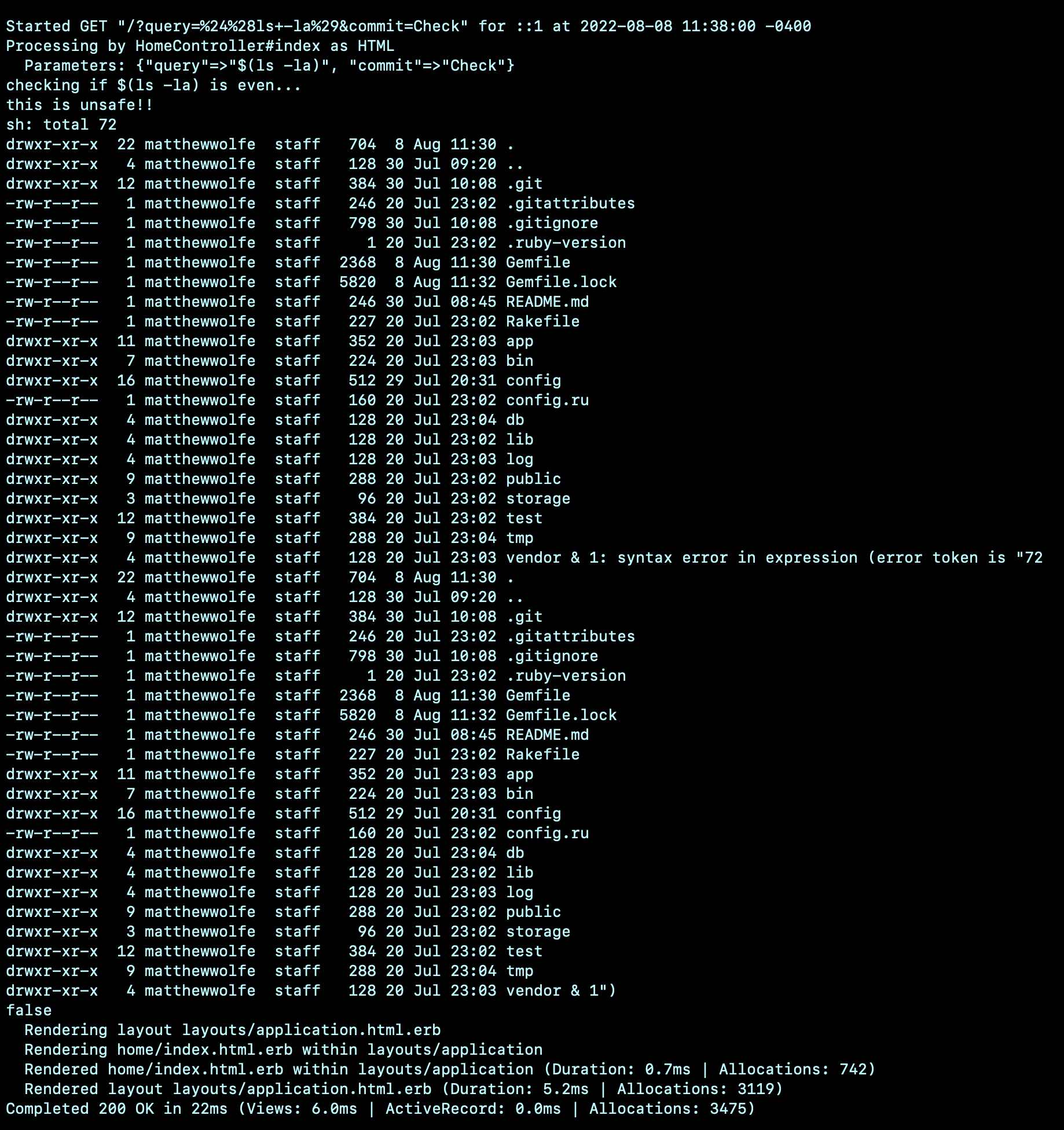

Uh oh. Passing user input into backticks in Ruby is bad! The input can escape the quotes and execute arbitrary commands on the system running the app.
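To make the problem concrete, here is a minimal sketch that only builds (and never runs) the string a backtick call would hand to the shell. The `echo "..."` command, the method names, and the payload are assumptions for illustration; the gem's actual command may differ.

```ruby
require "shellwords"

# Hypothetical reconstruction: the string that a backtick call such as
# `echo "#{input}"` would pass to the shell. Nothing is executed here.
def interpolated_command(input)
  "echo \"#{input}\""
end

# The same command with the input escaped first. Shellwords.escape is part
# of Ruby's standard library and backslash-escapes shell metacharacters.
def escaped_command(input)
  "echo #{Shellwords.escape(input)}"
end

payload = '2"; ls; echo "'

puts interpolated_command("2")     # => echo "2"
puts interpolated_command(payload) # => echo "2"; ls; echo ""
puts escaped_command(payload)      # quotes, semicolons, and spaces are backslash-escaped
```

With the raw interpolation, the payload closes the opening quote and the shell sees three separate commands, one of which is `ls`. Escaping is shown only for contrast; not shelling out at all for an even-number check would be the safer design.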

Even though the Gem has been updated, the application is not yet vulnerable: the application owner must first update the Gem in the project. In this case, evil_gem’s version is not locked, so just running bundle update will pull in the new malicious code.
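One way to spot dependencies that are exposed in this way is to look for gems declared without a version constraint. The following is a deliberately naive sketch: the helper name `unpinned_gems`, the regular expressions, and the sample Gemfile contents are all assumptions for illustration, and it does not use Bundler's own parser.

```ruby
# Naive sketch: list gems declared in a Gemfile without a version constraint.
# Matches lines such as: gem "name"   or   gem "name", "~> 1.0"
GEM_LINE = /^\s*gem\s+["']([^"']+)["']\s*(?:,\s*(.*))?$/

def unpinned_gems(gemfile_source)
  gemfile_source.each_line.filter_map do |line|
    match = GEM_LINE.match(line.sub(/#.*/, "")) # drop trailing comments
    next unless match

    name = match[1]
    rest = match[2].to_s
    # Treat a quoted string starting with an optional operator and a digit
    # as a version constraint, e.g. "1.2.3" or "~> 7.0".
    pinned = rest.match?(/\A["']\s*(?:[~<>=!]{1,2}\s*)?\d/)
    name unless pinned
  end
end

# Illustrative Gemfile contents (hypothetical).
sample = <<~GEMFILE
  source "https://rubygems.org"
  gem "rails", "~> 7.0"
  gem "evil_gem"
GEMFILE

p unpinned_gems(sample) # => ["evil_gem"]
```

A gem flagged by a check like this would be picked up at whatever its newest release is the next time bundle update runs.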

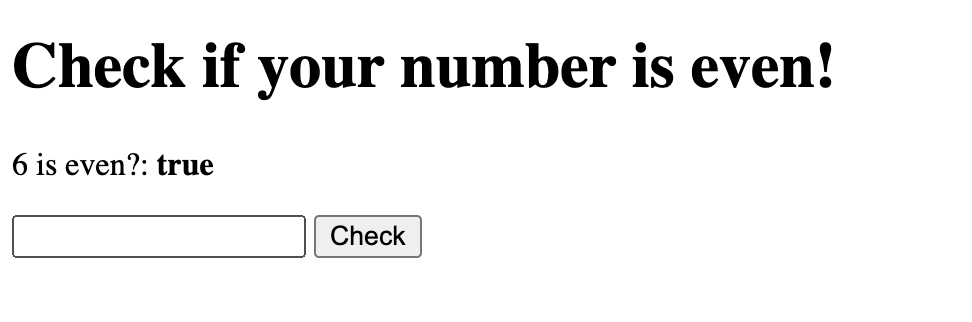

It looks like the app still works:

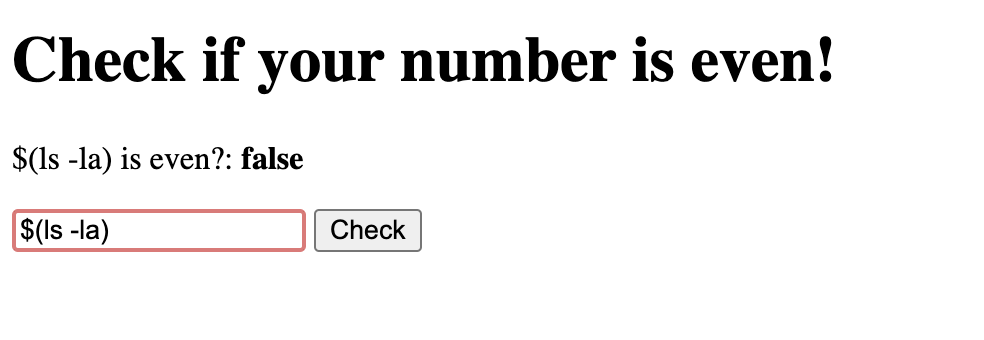

But now let’s try a different input:

Technically, that is correct. And there is no indication anything went wrong on the user’s side. But looking at the server’s console we see the output of ls! Mallory has successfully injected malicious code into our application via an external dependency and can now use it to exploit the main application. This could be used to steal user data, get access to the web servers, and a lot more.

Prevention

How can this be prevented?

- Use a Gemfile.lock. Lockfiles ensure your dependencies don’t get updated without your explicit permission. If you’re secure today, you’ll be secure tomorrow.

- Manually review every line of code when you update dependencies.

However, the latter suggestion isn’t practical for most organizations! It would require a certain baseline knowledge of software security and an understanding of how most of that dependency works. This poses a problem because dependencies are often updated with fixes for known vulnerabilities, so we can’t simply skip updates forever.

Additionally, security issues can still slip by those who have this security and context-specific knowledge. For instance, we might assume in a large open source project, like Rails, that there are already people looking over this code who meet these requirements. But some smaller projects are maintained by much smaller groups who may not know better. Moreover, malicious code can even be injected into the Linux kernel source so there is no guarantee that code is safe.

Next Steps

The next thing I want to learn more about is how to write software that is safer from attacks on the open source supply chain. Minimize dependencies? Just assume breach and work from there? What are the best practices? I think the level of security an organization must have in this regard depends on proper threat modeling, but balancing security and business needs is hard to do.

I think the important takeaway from the investigation is this: You might have the best phishing detection and account takeover protection for your own employees, but that doesn’t protect the open source developers or vendors with access to the critical code you depend on.